Don't ask people to write quality code

Four years ago, I complained about people who ask others to write good code in a context where the company culture itself is what leads to bad code in the first place. I explained why root cause analysis (RCA) is necessary, and why individual suggestions to write quality code are useless.

It is now time to expand on the subject.

A culture of quality is a curious beast, and it is not easy to tame. It is essentially impossible to obtain quality simply by requesting it. Instead, quality should be nurtured until it becomes an inherent part of the company's culture. A few companies I consulted for over the years had a peculiar approach to quality: from a marketing perspective, they were quality-driven, and so were their interviews. They expected interviewees to put an emphasis on quality, and employees themselves were expected to make it look like quality mattered.

There are many ways for a developer to look as if they know something about quality. Here is a non-exhaustive list of techniques:

Every time you see someone else's code (preferably code written by someone who left the company long ago), complain in terms such as: “Why, but why is it written this way?! How could anyone do such a thing?!”

Enforce superfluous rules, such as a coding style. If possible, go through the whole code base “fixing” code, and then tell everyone that you spent the past two days on the very valuable task of improving quality. The more time you spend doing that, the better, which also means that you should avoid automation.

Change the code so it “looks better,” whatever that means in context. You don't need to be able to explain the value of your change, because anyone with any taste for quality should be able to see the beauty of your code. By the way, you don't have to limit yourself to the parts of the code you touch while working on a feature; the change may be anywhere in the code base.

Be particularly pedantic. If the person you're talking with doesn't know the difference between a function and a method, or between a URI, a URL, and a URN, or can't explain the relation between a class, an object, and an instance of a class, shake your head in despair and promise to explain these basics later (do look them up on the Internet afterwards, otherwise you'll be embarrassed if the person asks you for an explanation over a cup of coffee).

Look techy. This means that you should know every new technology that is generating hype, talk dirty (technically speaking), and try anything fashionable, such as a new testing framework, preferably on your company's project, and preferably during work hours.

Change the structure of the project on the grounds that at least someone in the team understands the application design. Another benefit of a structural change is that it often makes it difficult to track changes through the version control history, and makes you the author of most of the files.

Many programmers know these techniques and use them on a regular basis. Companies whose inherent culture is to look quality-driven will attract more of these programmers, and will encourage this behavior in those who didn't have it originally.

The unfortunate aspect of quality is that it is easy to confuse real quality with something that merely pretends to have it. It happens with the products we buy: a product that looks solid at first often turns out to be made of cheap components. In the case of products, this problem can be mitigated by acquiring more knowledge. For example, a few years ago I made the mistake of buying a UPS from Infosec. Now that I have taken apart several UPSes from different companies and know a bit better what makes a good UPS, I won't fall into the same trap.

But the culture of quality can hardly be compared to the technical quality of the components used in a device. So what can we do to ensure that quality work is produced, if marketing quality to employees has such an adverse effect?

For simpler things, it may come down to money. For instance, if you develop a product that needs to be secure, just throw more money at security. If the interaction design of your product matters, use money as leverage to hire better designers who will spend more time working on your product. Need reliability? More money will help you buy more servers and hire people who specialize in the reliability aspects of a software product. Need performance? Money can get you that, too.

But you can't exchange money for quality in a straightforward manner. You can't simply pay extra to the developers who make it look like they care about quality. All you will get in return is more of the behavior I described above.

This brings up the question of how to objectively evaluate the quality of a product. I have already considered this subject in the past, emphasizing the importance of a valuation over a metric, and the difference between the programmers' own perception of code quality and the actual quality of the code base. In the same article, I explained why the authors' own evaluation of their code is irrelevant when it comes to identifying its quality.

While quality cannot be measured directly, it can be measured indirectly, through objective metrics such as the number of issues reported over a period of time, correlated with the number of releases, the velocity of the team, and the number and size of the features delivered over the same period. Here, the quality of the code is not evaluated directly; instead, the metric depends on the quality of the code as it was before the period, and also reflects the quality of the developers' work during that period.
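To make the idea of correlating issues with delivered work a bit more concrete, here is a minimal sketch that normalizes the number of reported issues by releases, features, and feature size for one period. The `Period` fields and the `indirect_quality` function are hypothetical names chosen for illustration, and the sample numbers are invented; team velocity could serve as yet another denominator in the same way.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Period:
    """Counts gathered over one reporting period (field names are hypothetical)."""
    issues_reported: int
    releases: int
    features_delivered: int
    feature_size: float  # total size of delivered features, e.g. in story points


def _ratio(numerator: float, denominator: float) -> Optional[float]:
    # A ratio is undefined when nothing was shipped during the period.
    return numerator / denominator if denominator else None


def indirect_quality(p: Period) -> Dict[str, Optional[float]]:
    """Normalize the number of reported issues by the amount of work shipped.

    Lower values over successive periods suggest the team ships more
    without breaking more; the numbers say nothing about *why*.
    """
    return {
        "issues_per_release": _ratio(p.issues_reported, p.releases),
        "issues_per_feature": _ratio(p.issues_reported, p.features_delivered),
        "issues_per_size_unit": _ratio(p.issues_reported, p.feature_size),
    }


# Illustrative numbers only, not data from any real team.
q1 = Period(issues_reported=30, releases=3, features_delivered=12, feature_size=60)
q2 = Period(issues_reported=24, releases=4, features_delivered=15, feature_size=80)
for name, period in (("Q1", q1), ("Q2", q2)):
    print(name, indirect_quality(period))
```

Comparing the ratios across periods, rather than reading any single value in isolation, is what turns raw issue counts into an indirect signal about the code written earlier.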

Another indirect metric would be the average time needed for new developers to become productive. For products at the higher end of the quality scale, this is a matter of days. Note that the size of the product is irrelevant here (the complexity of the domain, however, is a factor to take into account).

A series of counters can be set up so that, combined, they make high-quality code blossom without encouraging developers to look quality-driven through devious means. “Combined” is the important word here: no single simple metric will produce quality.

Simple, non-systemic metrics produce the behavior I described at the beginning of the article, or, when they don't, they encourage behavior that is not particularly fruitful. For instance, measuring the compliance of a code base with style rules is very simple. But can you imagine a metric that would tell you how well design patterns were applied, and whether they were applied only where they are actually needed?

Example

A few months ago, I helped a colleague set up such a system. A former developer, he was the project manager of a team of six developers. Quality was suffering, and basic encouragement to write quality code had no effect. My colleague assumed that the low quality was due to the developers working under pressure, but when he managed to reduce the pressure, quality remained low.

We started by designing together a system of twenty-six metrics. Some were gathered automatically on every commit or every night. Others came out of regular team meetings and were collected by averaging the ratings given by the developers themselves. Some were very simple; others were quite complex to understand and to gather. The whole system was shared with the team, and a few meetings ensured that everybody understood the metrics and was happy with them. At the end of those meetings, four metrics were dropped at the request of some team members.
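For the metrics that came out of team meetings, the aggregation step can be as simple as a per-metric average over the developers' ratings. The sketch below assumes a 1-to-5 rating scale, and the developer names, metric names, and the `average_notes` helper are all hypothetical; it also shows one way to exclude metrics the team asked to drop.

```python
from statistics import mean
from typing import Dict, FrozenSet, List

# Hypothetical ratings (1 to 5) given by each developer during one team
# meeting. Developer and metric names are illustrative, not from the article.
meeting_notes: Dict[str, Dict[str, int]] = {
    "dev_a": {"review_usefulness": 4, "design_clarity": 3, "pairing_value": 2},
    "dev_b": {"review_usefulness": 5, "design_clarity": 2, "pairing_value": 3},
    "dev_c": {"review_usefulness": 3, "design_clarity": 4, "pairing_value": 1},
}


def average_notes(
    notes_by_dev: Dict[str, Dict[str, int]],
    dropped: FrozenSet[str] = frozenset(),
) -> Dict[str, float]:
    """Average each meeting-based metric across developers.

    Metrics listed in `dropped` (for instance, ones the team asked to remove)
    are ignored. A developer who did not rate a metric is simply not counted.
    """
    per_metric: Dict[str, List[int]] = {}
    for ratings in notes_by_dev.values():
        for metric, value in ratings.items():
            if metric in dropped:
                continue
            per_metric.setdefault(metric, []).append(value)
    return {metric: float(mean(values)) for metric, values in per_metric.items()}


print(average_notes(meeting_notes, dropped=frozenset({"pairing_value"})))
```

Keeping the list of dropped metrics explicit, rather than deleting the data, makes it easy to revisit the team's decision later.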

The next step was to choose the reinforcement mechanism. Money was out of the question (French culture and laws make it practically impossible to reward excellence among employees), so instead I suggested playing on reputation. The developers seemed slightly demotivated but quite competent, so simple reputation within the team could work, unlike in teams where programmers view their job merely as a means of earning money.

Finally, RCA helped us understand some of the reasons developers were not producing quality code. One reason was that although everyone claimed to know the SOLID principles, they seemed never to have seen code following them outside the very basic examples from books. Showing them those principles in practice was a huge boost. Similarly, it turned out that nobody was really familiar with design patterns, and nobody could really claim the role of software designer: after all, they were developers, and had been hired as developers, not designers. I helped my colleague spot the basic issues with the current design, and the company is preparing to hire a designer to fix the remaining problems.

Over the past two months, the metrics have shown an increase in quality. No, it's not an increase from 5.92 to 9.27, or whatever numbers you might imagine; it is rather a move from “has severe issues” to “you've got issues” on the scale from the article I mentioned above. If they don't screw up hiring the software designer, I'm sure they will quickly move to “minor concerns.”

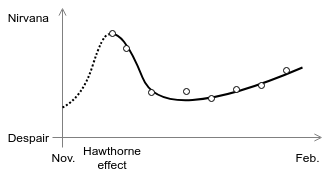

A nice side effect is the happiness of the developers themselves, which jumped right after we discussed the metrics and started deploying them (a direct consequence of the Hawthorne effect), but which also kept increasing over time. Happiness itself was measured through some of the twenty-two metrics, including:

- The cross-esteem metric: during the daily meeting, every member indicated whether he thought he had done something valuable the day before, and whether each of the five other members had.

- The “this team is cool” metric: every week, each member rated how happy the five other members seemed with their work.

- The Friday motivation metric: “I so want this week to end!” My favorite.

Figure 1: The happiness of the developers. The dashed part of the curve covers the period before the metrics were collected; it is a sloppy, unscientific representation of the impression we got from the developers themselves, as well as of the project manager's impression of the overall mood of the team. This is not how you should collect data.